Invalidating Varnish Cache Without Performance Impact

How to perform cache refresh with no performance impact for high traffic websites.

May 17, 2019

Flushing the entire Varnish cache can be tricky because it causes *all* of your visitors' requests to hit your application server (such as Magento or WordPress), leading to a spike in server resource utilization. For high-traffic websites, such a situation can last for hours before the Varnish cache is populated well enough to shield the application server from requests again. And you face the same situation every time you wish to perform a site-wide update.

The cache invalidation strategy detailed in this post overcomes this situation. It is based on my experience implementing cache invalidation at a couple of high-traffic websites leveraging Varnish caching.

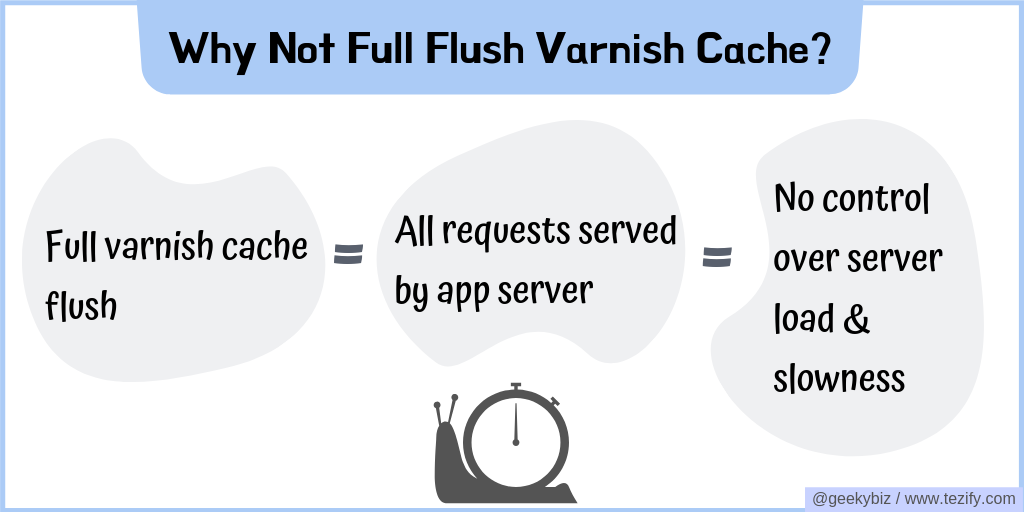

Do Not Fully Flush the Varnish Cache

Flushing the entire Varnish cache is a bad idea because it causes all user requests to be served by your application server. In such a situation, the load on your server depends on the number of visitors on your site at that time. This is troublesome because it means you have no control over the slowdown or the server load. You cannot do anything other than sit and wait for the Varnish cache to fill up again. As a result, avoid a full Varnish cache flush at all costs.

To stay in control of your website's performance and server load, avoid full Varnish cache flush.

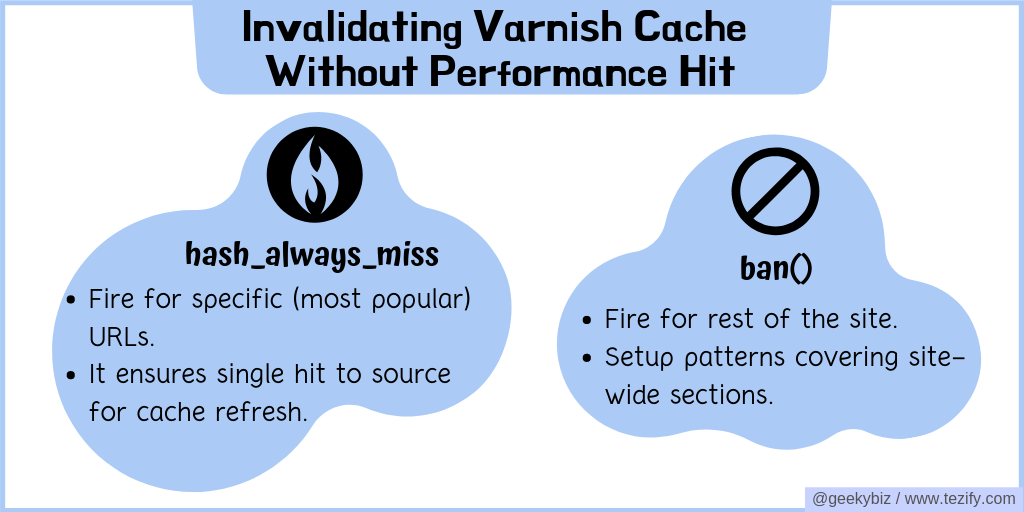

Leverage hash_always_miss for Popular Pages

Invalidating the cache entries of a popular page (like the homepage) on a high-traffic website can cause more than one request for that page to be sent to the application server. This can cause a spike in resource utilization that affects the performance of the overall website. Varnish provides hash_always_miss to overcome such a situation.

When hash_always_miss is set on a request within vcl_recv, Varnish fetches the response from the backend even if the object is already present in the Varnish cache. Once the new page is fetched and cached, all future requests for that page are served the newer cached copy. As a result, the total number of requests for a refreshed page that go to the backend or application server remains at one. This allows us to perform a controlled refresh for specific URLs.

To make this work, your Varnish configuration (typically at /etc/varnish/default.vcl) needs a rule that determines which requests are set to hash_always_miss:

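    sub vcl_recv {
        ....
        # Force a backend fetch only when the request comes from the trusted
        # address and carries the varnish-cache-refresh header.
        # Note: the client address is exposed as client.ip, not req.client.ip.
        if (client.ip == "IP_Address_from_where_to_refresh" && req.http.varnish-cache-refresh) {
            set req.hash_always_miss = true;
        }
        ...
    }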

Then you can issue HTTP requests to refresh specific URLs as follows (notice the varnish-cache-refresh header in both the configuration above and the request below):

curl -I 'URL_TO_REFRESH' -H 'user-agent: Mozilla/5.0 (iPhone; CPU iPhone OS 11_0 like Mac OS X) AppleWebKit/604.1.38 (KHTML, like Gecko) Version/11.0 Mobile/15A372 Safari/604.1' -H 'varnish-cache-refresh: True'

With hash_always_miss, you can refresh Varnish with a fresh copy of a cached object by making a single request. This is especially useful for popular pages of high-traffic websites.
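If refresh requests may come from more than one machine, an ACL is a common alternative to comparing against a single address. The fragment below is a minimal sketch to merge into an existing default.vcl, assuming Varnish 4 or later; the ACL name refreshers and the addresses in it are placeholders, not part of the original setup.

```vcl
# Hypothetical ACL: hosts that are allowed to trigger a refresh.
acl refreshers {
    "127.0.0.1";
    "192.0.2.10";   # placeholder address, replace with your own
}

sub vcl_recv {
    # Force a backend fetch only for trusted clients that send the header.
    if (client.ip ~ refreshers && req.http.varnish-cache-refresh) {
        set req.hash_always_miss = true;
    }
}
```

Restricting the rule to trusted addresses matters because anyone able to set hash_always_miss could otherwise push arbitrary traffic through to the backend.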

Leverage ban for Rest of the Site

A limitation of the hash_always_miss approach is that it can only be issued for individual Varnish cache objects. This can be problematic for a website with thousands of pages or URL patterns, since there is always a chance of missing certain pages or patterns. Also, refreshing a large site one URL at a time with hash_always_miss can be slow. Varnish's ban() capability can be used for less popular pages and for specifying URL patterns.

A ban request returns instantaneously. This happens because a ban request simply instructs Varnish to add the pattern to its ban list. When a future request matches a cached object, the object is checked against the ban list. If the cached entry matches a ban pattern and was cached before that ban-list entry was created, Varnish fetches a fresh object from the backend.
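The post does not show how bans are issued, so here is a minimal sketch of one common arrangement: a custom BAN request method handled in vcl_recv. It assumes Varnish 4 or later; the ACL name banners, the x-ban-url header, and the response messages are hypothetical names chosen for illustration.

```vcl
# Hypothetical ACL: hosts that are allowed to issue bans.
acl banners {
    "127.0.0.1";
}

sub vcl_recv {
    if (req.method == "BAN") {
        # Reject ban requests from untrusted clients.
        if (client.ip !~ banners) {
            return (synth(403, "Forbidden"));
        }
        # x-ban-url is an assumed header carrying a regex of URLs to invalidate.
        if (!req.http.x-ban-url) {
            return (synth(400, "Missing x-ban-url header"));
        }
        # Add the pattern to the ban list; cached objects are not touched here.
        ban("req.url ~ " + req.http.x-ban-url);
        return (synth(200, "Ban added"));
    }
}
```

With a rule like this in place, a request such as `curl -X BAN 'URL_OF_SITE' -H 'x-ban-url: ^/category/'` would add a ban covering every URL under /category/ (the path is only an example).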

Since banned patterns are evaluated while actual requests are being served, site-wide bans should be avoided and ban patterns should be specified carefully.

Varnish's ban() returns instantaneously and allows you to specify patterns that can match multiple URLs. This makes ban() suitable for invalidating the cache for less popular pages relatively quickly.
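One way to reduce the work done at request time is to write bans that reference only obj.* fields, which Varnish's background ban lurker is able to process on its own. The following is a minimal sketch of that commonly documented pattern, assuming Varnish 4 or later; the x-url header name is an assumption chosen for illustration.

```vcl
sub vcl_backend_response {
    # Copy the request URL onto the stored object so that a ban
    # can match it without referring to req.*.
    set beresp.http.x-url = bereq.url;
}

sub vcl_deliver {
    # Hide the helper header from clients.
    unset resp.http.x-url;
}
```

A ban would then be written against the stored header, for example `obj.http.x-url ~ ^/category/` (an illustrative pattern), instead of against req.url.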

Set the Time To Live (TTL) Value Carefully

Neither hash_always_miss nor ban removes the invalidated object from the Varnish cache right away (unlike purge or a full flush). So, when using these methods to invalidate the cache, it is critical to set TTL values carefully so that invalidated objects do get deleted from the cache eventually.

The Varnish TTL is the time (in seconds) for which an object is cached. The default TTL of 2 minutes is hardly useful. However, setting the TTL to a very high value (say 6 months) could leave a large number of invalidated objects in the cache. An ideal TTL value is slightly higher than your usual interval between cache refreshes. If you expect to refresh your Varnish cache once every week, 2 weeks would be an ideal TTL value.

    sub vcl_backend_response {
        # Keep objects for two weeks so invalidated copies eventually expire.
        set beresp.ttl = 2w;
    }

When using hash_always_miss and ban to invalidate cache, a time to live value that is slightly higher than the frequency of cache invalidation is ideal.

Conclusion

Setting up a flexible and powerful cache invalidation strategy is critical to continuous deployment with minimal impact on visitors' experience. Varnish's combination of cache invalidation options can help you build the right invalidation strategy. Once established, it can be automated and incorporated into a continuous deployment process. This lets you leverage Varnish's caching capabilities without paying cache invalidation penalties.